Oxwall, Part 1: Setting Up

This is the first entry in my Free Software Fix-Up: Oxwall series.

Version Control

The first step I took to start this new software project was to set up a version-controlled repository for it. Version control allows changes to files over time to be tracked, managed, and preserved, providing, for example, the essential ability to roll back to a previous version of code if a recent change introduced a bug. Though version control is often associated with software engineering and code repositories, it can also manage revisions for documents, blog posts (like these!), and more.

Having used it for every other software project I've worked on thus far, I chose Git, the most popular version control system out there (originally authored by Linus Torvalds of Linux fame), for this new project. Beyond Git itself, I also wanted a central location to store the code under version control. That would let me (a) easily work on this project from the multiple machines I use (I'm split between Ubuntu in Windows Subsystem for Linux on Windows 10 and macOS) without worrying too much about synchronizing changes across them, and (b) rest assured that my code would not be lost if something were to happen to those machines. While GitHub (a separate entity from Git itself) is quite popular in the industry for hosting repositories, I went with Bitbucket, a roughly equivalent service from Atlassian, the makers of Agile favorites Confluence and Jira. Always a seeker of cost efficiency where possible, I've had other personal, private repositories hosted there since before GitHub started allowing non-paying users to create private repositories in 2019. Plus, I'd prefer to wait out the initial tumult of Microsoft-owned GitHub's foray into artificial intelligence, for which it has been accused of scraping users' repository code for its GitHub Copilot tool.

Once I had settled on a version control system and a repository host, I cloned the original Oxwall repository to one of my local machines and initialized my local Git repository. I then set its remote origin to the corresponding Bitbucket repository I had created alongside it, and so it began.

Running Locally

In years past, I've hosted Oxwall on several free hosting providers, but I figured that development would proceed more rapidly if I could run Oxwall on my local machine. Ideally, I could open a localhost URL in my web browser and view an Oxwall site generated by a local PHP interpreter, a MySQL database, and the source code in my local repository. Any code changes I made would then take effect immediately (or, at least, when I refresh the page), similar to what is referred to more broadly as hot reloading. In other words, all development and testing should stay on my local machine, save for pushing code changes to my Bitbucket repository from time to time.

I've had good experiences with the all-in-one local development stacks WAMP and MAMP. WAMP runs on Windows, with Apache as the web server that serves pages to users, a MySQL database for persistent data storage, and the PHP language for server-side functionality; MAMP uses the same technologies but runs on macOS. However, I would have to configure the installation on each of my machines separately and ensure that they all run the same versions of Apache, MySQL, and PHP for a consistent development experience. Should the installations drift out of sync over the course of this project, I could easily find myself facing the infamous "it works on my machine" problem, in which Oxwall runs without issue on one of my machines but not the other. Additionally, if I were to open this project up in the future, I'd like to document a simple way for others to run their own copies locally, regardless of their operating systems and configurations.

My natural choice for this purpose, then, was Docker, the leading platform for running containers. Containers are lighter-weight counterparts to virtual machines: each bundles an application with its dependencies and operating system libraries but shares the kernel of the system running it, and it can run on any system that can run the Docker Engine itself. I could write a Dockerfile with instructions for building an image, from which containers can then be run, and Docker would build and run that image in a repeatable, automated manner. A few Oxwall images created by third parties do exist on Docker Hub, but they're either out of date or purpose-built for others' specific needs rather than being generic Oxwall installs that anyone could get started with for any purpose. As a result, I affirmed my choice to write my own Dockerfile and build my own Oxwall Docker image.

All Docker images must be built atop an existing base image. To serve PHP- and Apache-based Oxwall, I selected the php:8.3-apache image from the official PHP repository on Docker Hub. It includes an Apache web server that executes PHP (via Apache's mod_php) and serves PHP-based pages out of the box.

I also tried the fpm (FastCGI Process Manager) variant of the PHP base image, which is generally better suited to high-performance workloads than the apache variant I'm using here. The fpm variant does not come with a built-in web server like Apache. To compensate, I spun up an Nginx container (Nginx being an alternative to Apache renowned for its high performance) alongside it and connected the two through container networking. However, Oxwall is designed to run on Apache servers, and Apache is still prevalent across web hosting providers like those I've used to host Oxwall in the past, so I'm sticking with Apache for this project.

With some trial and error (building the image, checking whether Oxwall displayed any error messages, determining which Linux packages I'd need to install to overcome those errors, rebuilding, and so on), I created the following Dockerfile:

```dockerfile
# Pull the base Docker image
FROM php:8.3-apache

# Apply a custom PHP configuration file to enable useful and modern
# PHP features, such as JIT compilation, opcode caching, and
# some error logging
COPY php.ini /usr/local/etc/php/conf.d/

# Install the following Linux, PHP, and Apache dependencies/modules,
# determined through trial and error, to allow Oxwall to run as intended.
# These commands could have been split into multiple RUN statements,
# but combining them into one reduces the number of image layers, and
# deleting the apt package lists within the same layer keeps them out of
# the final image, perhaps at the expense of some build-time caching.
RUN apt-get update && apt-get install -y \
        cron \
        libjpeg-dev \
        libfreetype6-dev \
        libpng-dev \
        libssl-dev \
        libonig-dev \
        libzip-dev \
        libicu-dev \
        ssmtp \
        zip && \
    rm -rf /var/lib/apt/lists/* && \
    docker-php-ext-configure gd --with-freetype=/usr --with-jpeg=/usr && \
    docker-php-ext-configure intl && \
    docker-php-ext-install gd mbstring pdo_mysql zip ftp intl && \
    a2enmod rewrite && a2enmod headers

# Run the Apache server in the foreground when the container is started
# (the php:8.3-apache base image already uses this as its default CMD)
CMD ["apache2-foreground"]
```

Hot Reloading

If you've worked with Docker before, you might notice that nothing in this Dockerfile copies the Oxwall source files from the local repository into the image. On its own, the image essentially represents an empty web server that would probably display a default Apache page, rather than the expected Oxwall site, when run. Copying the source files into the image with Docker's COPY instruction would require rebuilding and rerunning the image to see the effect of every code change, which would drastically slow down development. Docker would still cache the build steps that don't depend on the source files (such as installing dependencies), but even so, it would not be ideal.

The solution to this issue was to expose the Oxwall source files to the running PHP/Apache container by mounting the local repository into it at runtime as a Docker volume (technically, a bind mount). The web server in the container can still process and serve the source files as intended, and changes to those files made in the repository on the host machine are immediately visible in the container. As a result, I can see the effects of code changes on my local Oxwall site simply by reloading the page in my browser. Hot reloading in other toolchains (such as various React development servers) often goes further and reloads the page automatically after a code change, but what I achieved here is still far preferable to rebuilding the entire image after every change.

Adding a Database

This Dockerfile only covers the web server and PHP interpreter needed to display the pages of the Oxwall site. It does not include the MySQL database needed to persist site and user data, and what good is a social network that can't actually support the creation of users or store their data?

One reasonable option for adding a database to this setup is to install MySQL in the PHP/Apache image along with everything else already specified in the Dockerfile. However, Docker excels at orchestrating multiple containers and abstracting their details from one another (e.g., the PHP/Apache container generally shouldn't have to be concerned with the version and configuration of the database, and vice versa). Because of this, and because of my experience in the cloud with the common practice of separating the database from the web server (as in a two- or three-tier architecture), I chose to run MySQL as a separate container.

Orchestrating Multiple Containers

Running multiple containers and managing the relationships between them increases the operational burden; for instance, a single docker run command no longer cuts it once there is more than one container. I needed a way to simplify managing two containers that must work together. Docker's own Docker Compose tool, which defines and orchestrates multiple containers from a YAML configuration file, instantly came to mind. Alongside the Dockerfile for the web server, I created the following Docker Compose file:

```yaml
services:
  # The web server container
  oxwall:
    # Build and run the previously created Dockerfile
    build:
      context: .
      dockerfile: ./Dockerfile
    # Host port 80 often requires elevated privileges or is already taken
    # by another service, so the site will be accessible at localhost:8080
    ports:
      - '8080:80'
    networks:
      - oxwall
    # Mount the local Oxwall repository into this container
    volumes:
      - .:/var/www/html
    # Start the MySQL database, defined below, before the web server.
    # Note that this only controls start order; it does not wait until
    # MySQL is ready to accept connections.
    depends_on:
      - database

  # The MySQL database
  database:
    # No modifications to the official MySQL image are needed for this
    # project right now, so pull and use it directly from Docker Hub
    image: mysql:latest
    # The MYSQL_ROOT_PASSWORD environment variable is required to create
    # the administrative (root) database user and initialize the database
    environment:
      MYSQL_ROOT_PASSWORD: ${OW_MYSQL_ROOT_PASSWORD}
    # Publish port 3306, the default MySQL port, to the host so local
    # tools can connect; the web server reaches MySQL at database:3306
    # over the shared oxwall network
    ports:
      - '3306:3306'
    networks:
      - oxwall
    # Mount a named volume over the database's data directory, allowing
    # the data to persist between starts and stops of the container
    volumes:
      - ow_mysql:/var/lib/mysql

networks:
  oxwall:

volumes:
  ow_mysql:
```

From there, all I had left to do was set the OW_MYSQL_ROOT_PASSWORD environment variable in my local environment (Compose substitutes it into the MYSQL_ROOT_PASSWORD setting the MySQL container needs to initialize) and build and run the entire Oxwall stack with a simple docker compose up.
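One way to supply that variable is a .env file in the project directory. Docker Compose reads this file automatically when substituting `${...}` references in the Compose file. In the sketch below, the password is a placeholder. Adding .env to .gitignore is an assumption about keeping the secret out of the Bitbucket repository, not something described above.

```shell
# Write the variable that Compose substitutes into MYSQL_ROOT_PASSWORD
# (placeholder value; pick your own)
printf 'OW_MYSQL_ROOT_PASSWORD=%s\n' 'change-me' > .env

# Keep the secret out of version control (skipped if already ignored)
grep -qxF '.env' .gitignore 2>/dev/null || echo '.env' >> .gitignore

# Build the web server image if needed and start both containers in the
# background; drop --detach to stream their logs in the foreground
docker compose up --build --detach
```

Exporting OW_MYSQL_ROOT_PASSWORD in the shell works too, and a value set in the shell takes precedence over the one in .env.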

The Result

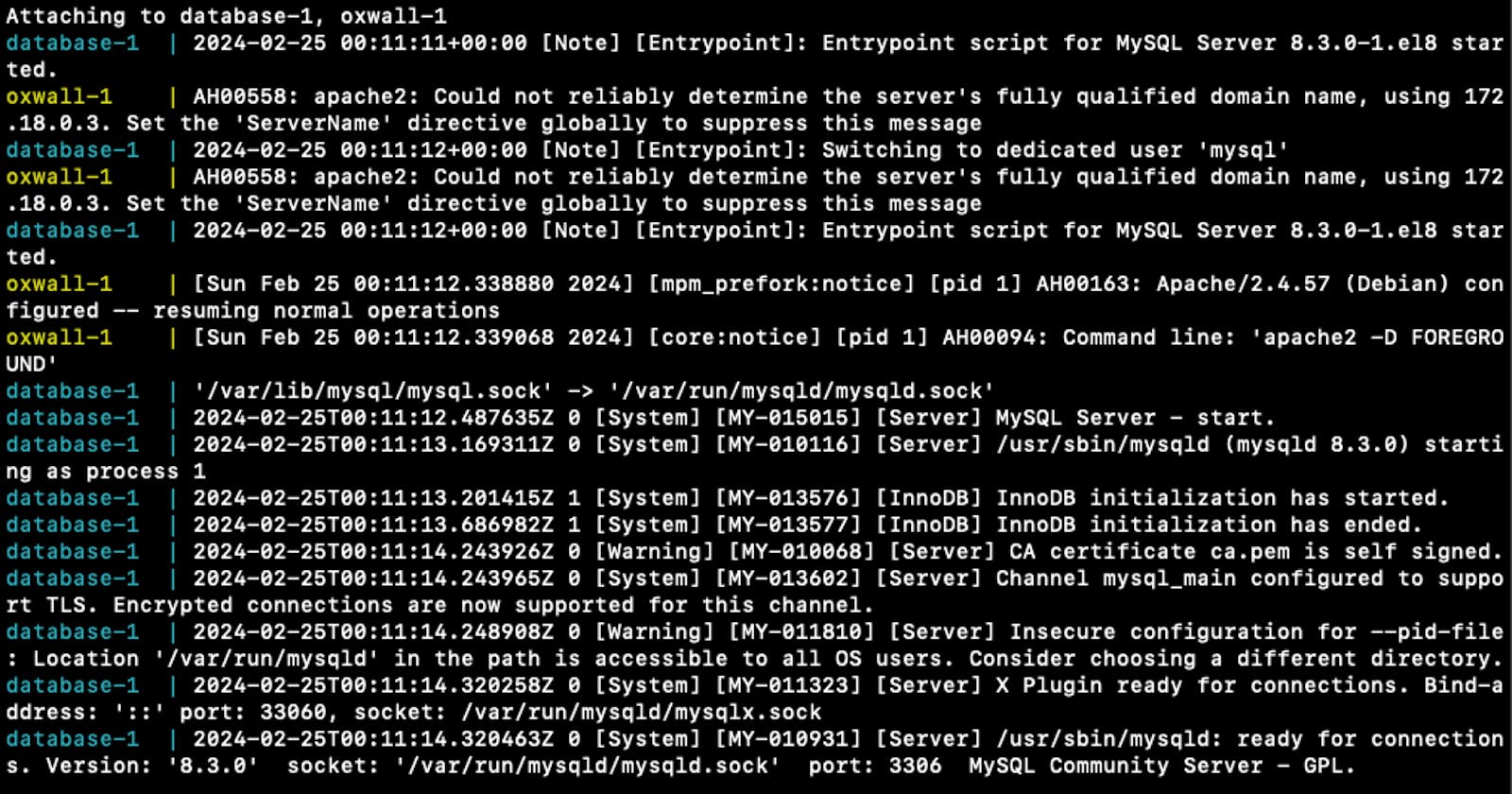

I ran docker compose up, and the database and web server started up as expected. It turns out that the stock Oxwall repository does not include the files that present a user interface for setting up the site for the first time (configuring the name of the site, the connection to the database, etc.), which Oxwall calls the "install" component. As a result, I saw an obscure PHP error message when I went to localhost:8080 in my browser, which, fortunately, at least confirmed that the web server was reachable. I got past that issue by finding the source files for the install UI in another repository maintained by Oxwall and copying those files into my project.

Upon reloading the page, I was redirected to the correct /install path for configuring the site for the first time but received the following PHP error:

Fatal error: Array and string offset access syntax with curly braces is no longer supported in /var/www/html/ow_install/controllers/install.php on line 593

Once upon a time, PHP supported accessing an array or string offset with curly braces ($arr{0}) in addition to the more common square brackets ($arr[0]). Oxwall's install interface source files still use the curly-brace syntax, but it was deprecated in PHP 7.4 (released in November 2019) and removed entirely in PHP 8.0 (released in November 2020). That is why PHP 8.3 in my image rejects the file outright.

Looks like I have my work cut out for me.